As programmers get better at reproducing behaviors, computers can appear to be more human-like.

Artificial intelligence (AI) is the set of methods and techniques that can make a computer seem human.

This represents a broad spectrum of implementations:

- Making decisions to play games the way a human does.

- Using language in writing that matches what a person would likely write.

- Using spoken language that matches what a person would say.

- Using spoken language that matches how a person would say it.

- Expressing human-like behavior with facial movements and body language.

- Responding appropriately to other humans’ behavior.

- Engaging people in a human-like way.

However, even simple things, such as filling in the text of a sentence you’re trying to write, are technically AI as well.

The best-known test of artificial intelligence is the Turing Test, also known as the imitation game. It involves a human judge and two candidates (one human and the other a computer) hidden behind a barrier. The judge receives notes passed from both and has to decide which of the two is the computer.

History

One of the earliest forms of AI, the expert system, dates to 1965. It operated on a set of if/then rules based on a body of knowledge, and it worked well enough for tasks with a limited set of choices and purely logical decisions (e.g., playing chess, “paper or plastic”, some video games).
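To make the if/then idea concrete, here is a minimal sketch of a rule-based decision for the “paper or plastic” case. The fact names, the rules, and their order are all invented for illustration; a real expert system would encode rules gathered from human experts.

```python
# Hypothetical rule base for a grocery-bagging decision.
# Each rule pairs a condition over the known facts with a conclusion.
# The facts and rules below are made up for illustration only.
RULES = [
    (lambda facts: facts.get("customer_brought_bag", False), "use the customer's bag"),
    (lambda facts: facts.get("item_is_wet", False), "plastic"),
    (lambda facts: facts.get("item_is_heavy", False), "paper"),
]

# Fallback when no rule applies: the system cannot improvise.
DEFAULT = "ask the customer"


def decide(facts):
    """Return the conclusion of the first rule whose condition holds."""
    for condition, conclusion in RULES:
        if condition(facts):
            return conclusion
    return DEFAULT
```

Calling `decide({"item_is_wet": True})` returns `"plastic"`, while `decide({})` falls through to the default. The system never learns anything: every behavior has to be written down as a rule ahead of time.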

However, expert systems were absolutely terrible at making decisions with many complexities. They also didn’t learn to detect patterns, and simply did what they were told. The first chatbot, ELIZA, was created in 1966 and imitated a psychotherapist by responding to whatever the user typed, but it relied on simple pattern matching and didn’t understand the conversation at all.

Machine learning is the newer approach to AI. In principle, if a machine learning algorithm is capable of detecting a pattern, it can also be used to reproduce it.

Machine learning, however, is only good for very specific tasks that are highly similar from one iteration to the next:

- Detecting whether a photo is of a specific animal (e.g., a cat), which also allows it to generate a decent enough photo on command. It would need re-training for something else, though (e.g., a dog).

- Judging whether a sentence is appropriate or vulgar, which allows it to generate its own sentences as well. It would need re-training for different writing styles, though.

- Reviewing business contracts for compliance and risk assessment, which can also mean drafting them. It would need re-training for each purpose, though.

- Observing trends across data, which theoretically can allow the algorithm to make future predictions.
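As a rough illustration of the last item, the sketch below fits a straight line to a handful of past observations and extends it one step into the future. It uses ordinary least squares, one of the simplest possible techniques, and the `months` and `sales` figures are made-up sample data rather than anything from a real system.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x around its mean, and how x and y vary together.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Extend the fitted line to a new x value."""
    slope, intercept = model
    return slope * x + intercept


# Made-up sample data: sales that grow by 2 each month.
months = [1, 2, 3, 4, 5]
sales = [10.0, 12.0, 14.0, 16.0, 18.0]

model = fit_line(months, sales)
forecast = predict(model, 6)  # projected sales for month 6
```

The forecast is only as good as the assumption that the trend continues. If the pattern in the data changes, the model keeps confidently extending the old line until it is re-trained.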

Limits

We’re a very long way off from reproducing human behavior. Since feelings are so messy, computers must be programmed to observe events and then appear to react to them. This often involves shedloads of calculus and statistics, but the result will always feel inauthentic to us because it misses idiosyncratic aspects of intuitive context.

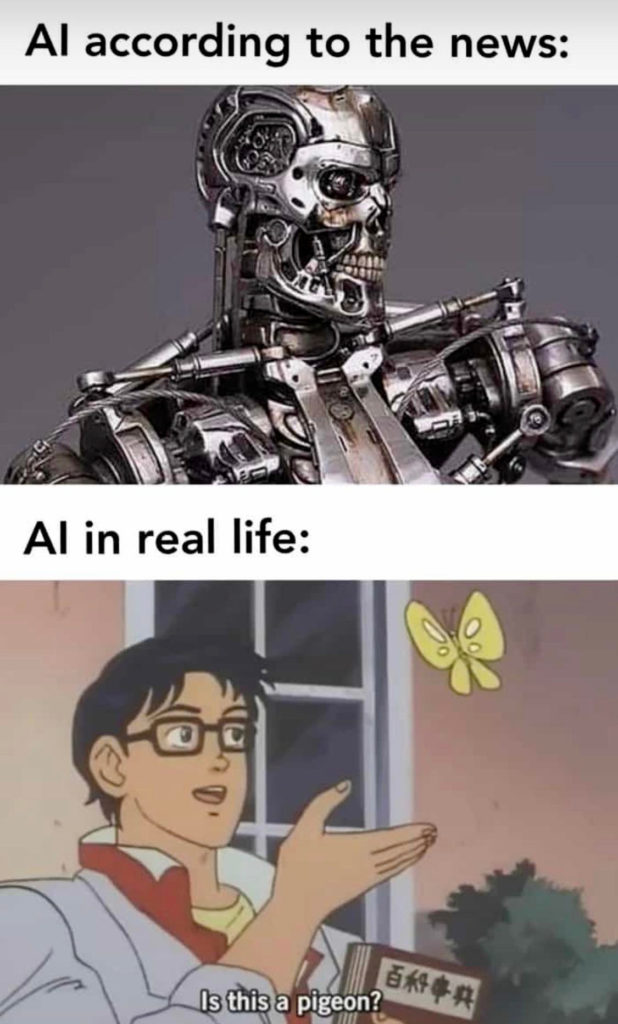

Even with all the benefits of machine learning, AI can be ridiculously stupid, and the gap between expectations and reality makes it look even worse.

The early 2020s have seen tons of hype about machine learning “creating” new works, such as music or paintings, but it really doesn’t. It simply builds neural network rules that define the general patterns in those works, then mixes and matches them to produce something within the predictable range of expected results. For example, some specific racial/ethnic facial features rarely mesh together (and we intuitively sense it), so looking very closely at This Person Does Not Exist will reveal small discrepancies that make most of the photos feel unnatural.

Making computers seem human is a daunting task, but some of the discussion has shifted to the question of how to make computers feel familiar. Computers can answer elaborate, complicated questions instantly, so appearing human requires putting well-timed pauses into the response. Even then, it’s arbitrarily slowing down the system to make it look like it’s thinking with a brain, and it’s still the same dumb computer doing precisely what it was instructed to do.
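A well-timed pause can be as simple as the sketch below, which waits roughly as long as a person would take to type the reply. The function names, the typing speed of 15 characters per second, and the 20% jitter are all assumptions picked for illustration, not figures from any real product.

```python
import random
import time


def humanlike_delay(reply, chars_per_second=15.0, jitter=0.2, rng=random):
    """Return how many seconds to wait before showing `reply`.

    The delay scales with the reply's length and is varied by up to
    +/- `jitter` (as a fraction) so it doesn't look mechanical.
    Both default values are illustrative guesses.
    """
    base = len(reply) / chars_per_second
    return base * (1.0 + rng.uniform(-jitter, jitter))


def respond(reply, sleep=time.sleep):
    """Pause as if typing, then hand back the already-computed reply."""
    sleep(humanlike_delay(reply))
    return reply
```

Note that the reply is fully computed before the pause begins. The delay adds nothing except the appearance of thought.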

Complete Limits

Also, depending on how we view the soul, there’s a hard philosophical “wall” that AI can never breach:

- If the naturalist philosophy (which is common in most atheism) is correct, then the mind, brain, psyche, and soul are synonyms, and theoretically reproducible with enough tweaking. Under this idea, AI that thinks like a human is only a matter of time.

- In a rationalist philosophy that holds the mind to be separate from any organic functions of the body (mind-body dualism), agency of choice is confined to the domain of religion and can never be reproduced, because that would put us on par with a Divine Creator/God.

- The only exception within rationalist philosophy, where our inability to create life would no longer hold, is pantheist doctrine, which implies we are in some sense God.

Further, if there is a God or gods, the fact that they built our aptitude likely also means they thought ahead and withheld the means to create life ourselves until we are fully ready for it.

But that’s the domain of religion and speculation. As it stands, creating life through AI is impossible.