Since Silicon Valley’s founding, novelty has reigned over computer technology, mostly because each new generation of computers was exponentially better than the last.

- Between 1985 and 2000, for example, a standard personal computer became thousands of times faster and could support far more, such as nicer GUIs and better games.

The tech industry is filled with young people. While there are older outliers, the industry skews toward people in their 20s, for a few reasons.

First, by their very nature, young people share a few demographic traits:

- They’re often more concerned about money than about a career, simply because of their stage of life, which makes them expendable.

- Their comparatively limited life experience means they’re easier to exploit, typically have a more positive attitude about most things, and are more likely to be loyal to their company.

- A limited understanding of the world combined with above-average intelligence frequently makes them think they’re more right than they are, which makes it hard for them to see common sense.

Second, new technologies are constantly being developed, adopted, and outmoded. Trends that would take 10–20 years to play out in the rest of the world shift within the tech industry in about 2–5 years, for several reasons:

- Because computer technology is built on abstraction, most software is fire-and-forget once it runs well, so an established technology becomes the starting point for other things. It literally moves at the speed of obsessively precise thought.

- There’s lots of money in the media/information complex.

- The young people described above simply like novelty for novelty’s sake, and their inexperience makes them less afraid of breaking a known-good system.

Because of this, the industry runs, and always will run, on cheap, naive, ambitious labor, at least until the money dries up.

The Young Neophiliacs

Anything dominated by young people that’s validated by prior success is almost guaranteed to push heavily against proven things that work. The founding of the USA is a similar story, which proved that a constitutional republic can work (at least for a few hundred years).

The history of Silicon Valley is a standard story of human nature pushing against limits. William Shockley founded the Shockley Semiconductor Laboratory in 1955. Only two years later, eight of its leading researchers (dubbed the “traitorous eight”) left to found Fairchild Semiconductor, a division of Fairchild Camera and Instrument, in nearby Palo Alto, California. Their efforts, combined with Bell Labs and a few other large players in the industry, created the Silicon Valley culture that’s still at least somewhat present in tech culture across the world.

Shortly after computers were developed, their philosophy took a distinctly postmodern angle, with the idea that human-computer synthesis would create an altogether new way of approaching life. Those aspirations were partially correct, but somewhat grandiose. The ideas can get lofty, and tend to borrow heavily from science fiction:

- J. C. R. Licklider’s 1960 paper “Man-Computer Symbiosis” implies that people will universally think and behave more intelligently because of computers. That hasn’t happened (though people do think faster), and we now have an overabundance of information that we must learn to fight.

- Many people anticipated that robots and AI would take over all the jobs. That hasn’t happened yet, and probably never will.

- Some people speculated the internet would eliminate ignorance and hatred. That hasn’t happened either, and both have arguably gotten worse.

Every time a new technology trend surges into the mainstream, expert marketing presents the product as a cure for all of humanity’s problems, until it turns out to have made them worse.

When criticized, its advocates mostly respond with “we haven’t gotten there yet, but we will soon!”, and most of them are die-hard adherents of the Idiot Ancestor Theory.

This new-is-better philosophy hasn’t really changed, either. Mark Zuckerberg famously made “move fast and break things” Facebook’s motto. Although he replaced it in 2014 with “move fast with stable infrastructure,” the attitude still permeates the culture.

One of the dominant reasons for this idealism is how differently computers are designed compared with nature:

- Nature is inherently messy, with obscure redundancies everywhere, hidden features, additional components, and endless permutations. We don’t understand all of it, and there’s no guarantee we ever will.

- Computers, by design, are built upon logic, which is always perfectly ordered, often well-organized, clean, conspicuous when problems arise, and resistant to arcane changes. Someone is an expert in every part of it, so solving a problem is mostly a matter of finding them or their documentation.

- Most computer-based work involves fighting back against the randomness of nature with things like error-correcting codes, and only specific, controlled uses of that chaos have any practical advantage (e.g., random number generators, AI). By contrast, nature is essentially chaos, with splashes of order.

Technology’s Downsides

To be clear, technology itself isn’t bad. Among other things, it lets us do things that would have been considered miracles a few short decades ago:

- Cochlear implants and, more recently, gene therapies now allow some people with hearing loss to regain their hearing.

- Quantum computers are designed around an absurd-sounding premise: they use probability itself as a computing resource.

- Given enough similar existing content to learn from, AI can now auto-generate entire books’ worth of legible text.

- This isn’t even touching on the modern conveniences we’ve normalized that would make a 19th-century futurist green with envy.

Technology makes our lives easier, faster, more convenient, and longer.

The downside of all the above-stated neophilia, though, is that proven practices and sturdy, reliable systems are often overlooked:

- COBOL is efficient at high-volume transaction processing, even though it has many downsides that make it unwieldy for most uses. Although it was created in 1959, it was still widely reported around 2020 to handle roughly 80% of in-person financial transactions.

- RSS is a reliable, decentralized, free format for publishing intermittently updated feeds of public information. Yet it isn’t on the radar of many tech people, largely because it dates from 1999.

- There have been efforts to convert all interfaces to GUIs, and even to VR, but nothing will ever fully replace the simplicity and directness of text-based messages and typed commands.

Since new things are by nature untested, highly influential speakers can grab the industry’s attention, even when they promise the impossible or have no credibility to back what they’re saying. Gathering evidence would take too long (and would mean missing the trend), so people get drawn in by either hope (of a good investment) or fear (of missing out).

Some of these influencers are literally on drugs. From the creators of Atari’s famous games through the executives running today’s tech companies, and from LSD to ketamine, the inspiration for the Next Big Thing often comes from copious amounts of substances that disconnect our ability to think in practical terms. Most people don’t know about it because, for various reasons, there’s very little incentive for anyone to talk about it.

This “newer is better” attitude also misses a key detail: non-tech people are naturally more resistant to change, and trends move slower for everyone else:

- People were quick to adopt the keyboard and mouse. A touchscreen is a logical step forward, but people will keep using the keyboard and mouse long after I write this in the 2020s. Even when a VR revolution arrives, flat display screens won’t disappear for a long time afterward.

- People still use printers, even though most tech people imagined they’d be gone by now. The same goes for tape drives, vinyl records, and camera film.

- “Smart” cars are unwieldy and awkward to use. One of the most egregious UX failures is replacing physical control knobs with buttons on a touchscreen. The design gets worse with a proprietary, stripped-down, garbage-quality OS. To add insult to injury, there’s often a way to “downgrade” your vehicle to include knobs again.

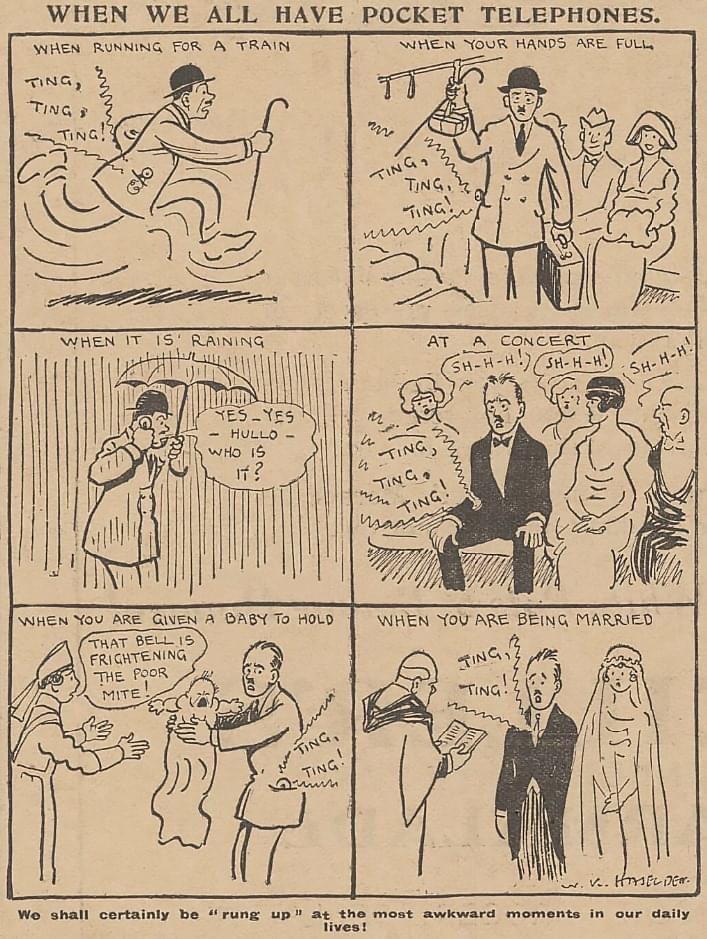

This neophilia isn’t new to computers, either. For more than a century, people have wrongly forecast that humanity would be rendered obsolete by the rise of horseless carriages, mass production, touch-button panels, and robots.

It’s perfectly reasonable to assume that AI or self-driving cars will yield a similar result to the grand trends of yesteryear, and that the future of DNA programming will be much of the same.

Technology’s Long-Term Downsides

A new-is-best attitude has constant long-term implications. Developers very frequently reinvent the wheel, and it’s not uncommon to see this pattern in descriptions of new technologies on GitHub and Product Hunt:

- “[Older Technology], but faster.”

- “Like [Older Technology], but has [Newer Technology Feature] in it.”

- “A simpler solution for [Problem With An Existing Known-Good Solution].”

Those technologies are typically better, but developers frequently perform tons of rework without considering the best use of their time. Most of them are motivated by the hope of becoming the Next Big Thing, but with about a decade more of life experience, they’d see how statistically unlikely that is and plan their limited time on this planet more wisely.

The age-old axioms of “Slow is steady, steady is smooth, smooth is fast” and Chesterton’s Fence (don’t remove something without understanding why it was put there) are foreign concepts in the tech world. As a result, tech blogs and tech guides carry a flow of potentially useless information that’s noisier than the stock market, and things frequently break because someone didn’t think extensive testing before shipping was worth it.

The irony is that many technologies do have tremendous utility, but most often long after everyone has stopped talking about them. For example, tape drives are still great at storing large amounts of information when you don’t mind losing some of it (e.g., farm data).

People will spend exorbitant amounts on the latest/greatest/newest/shiniest products, which stay current for only about 6–12 months before they feel they must buy more. That may be worth the money for people inside the industry, but it’s an absurdly expensive balance sheet item or hobby for everyone else.

Distorted Expectations

Obsession with novelty, along with perpetually working intimately with computers, can distort a person’s view of reality.

Computers are a unique world unto themselves:

- Updating a computer simply means running pre-made software the developer has already tested. The code is effectively identical to what the developer ran, and the changes are frequently minor or invisible to the user. Most updates can be presumed good.

- A GUI can look obsessively neat and tidy, so everything can satisfy the obsessive preferences of a computer user. If you don’t like the color of something, that’s often a setting or line of code away from changing, and it’s a rewarding experience to explore it.

- Everything in a computer is logic-based. If something breaks, there’s always a logical reason for it, and the code/hardware has a predictable answer if you look hard enough. Reading documentation is geeky and technical, but effective.

- Every aspect of a computer is clean-cut. Language is precisely specified, simulated physics are simplified reproductions of reality, and distortions of perception are layered on top of the exact information the computer already holds.

- Everything that’s “default” can be changed with the right programming.

By contrast, reality is messy:

- Updating something isn’t always easy. The very act of updating something violates previously formed habits, and the changes are frequently as destructive as they are helpful. You can’t always trust that an update comes from a reliable source.

- Obsessively organizing and managing life is almost more trouble than it’s worth. There are always sporks (things that fit more than one category), and it takes lots of time to establish and maintain an organization system. Even then, you might not have the room or resources to keep everything immaculately categorized.

- Everything in life is perception-based. When things break, that’s often only a matter of perception. Even at the atomic level, reality is bound up in uncertainty, and the primitives of perception itself are bound together with sentiment. We have no manual beyond whatever religion we follow, and the various kinds of documentation frequently contradict each other.

- The physics and sociology that tie to absurdly mundane things (such as boiling water or having a conversation about the weather) are vastly more complicated than most people realize, so predicting precisely is far harder than it sounds.

- Some things are hard-coded and can’t be redefined. Death and taxes, for example.

The effects of this show themselves through various pathways:

- Embracing the “quantified self”, the idea that people can find meaning through metrics like the number of productive hours or results generated.

- Belief that something can be entirely automated, to the point of never requiring the agency of a human being in any capacity.

- A near-worship of technology and its possibilities.

This can create remarkable delusions when tech-minded people try to expand their worldview beyond their computers, especially since Silicon Valley is heavily subject to the Cupertino Effect.

One clear consequence of all this is that most tech people are politically progressive:

- Naturally, if the old-fashioned way of things is inherently inferior, there’s no reason that a simple modern solution can’t fix what everyone’s been complicating for thousands of years.

- And, more importantly, that would mean any new political solution we haven’t tried yet can work to fix humanity, as demonstrated by the models.

Most of them miss the fact that nothing whatsoever under the sun can technically fix the human condition. People will use technology to make life easier in a general sense, but some will use that same technology to cheat, break laws, violate other people’s boundaries, and kill. Alfred Nobel believed his explosives would end war, and many later hoped the same of atomic weapons. It’s just as misguided to view any new technology as the conduit for a new moral condition.

Most prominently, AI and VR attract the most delusional expectations. AI is an attempt to create life, and VR is an attempt to recreate creation itself. A well-trained, human-like machine learning model will have all the defects of humanity, and a complete virtual world will have all the defects of the world we presently live in.

It’ll All Eventually Be Boring

The irony of these trends is that each one is individually a flash of popularity, but taken as a whole, they’re the same dull propaganda.

Eventually, all of the latest and greatest becomes boring. Right now, we live in a Jules Verne novel, but we’re oblivious to the remarkable things we’ve come to find boring.

I could tell you to go touch grass, but that’s its own trending phrase. Instead, I encourage you to explore some of the other wonders of modernity, such as horseless carriages, electrical torches, and automatic scribes.

Further Reading

Awesome Falsehoods Programmers Believe in